Data in VMware or other virtual machine environments is ‘complex’

One of the reasons we move to virtual machines is to consolidate and maximize CPU utilization: rather than 20 physical machines each averaging ‘10%’ CPU utilization, we consolidate onto a single larger machine and aim to have its CPU utilized at 80-90%.

This model works fine for most day to day transactional processing needs.

Yet behind these virtual machines could be Terabytes of data.

Come the start of the nightly backup, the physical server hosting our VMs will be found desperately short of the CPU cycles needed to process 20 concurrent backups.

In other words, we now know there was some value in having ‘surplus’ CPU cycles spread across our 20 old physical machines.
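As a rough illustration of this arithmetic, the Python sketch below compares spare CPU before and after consolidation. It measures capacity in units of one old physical machine (assuming all 20 were identical) and takes 85% as the midpoint of the 80-90% target. The per-backup CPU cost, `backup_cpu_per_vm`, is a purely hypothetical figure chosen for illustration, not a measured value.

```python
def spare_before(machines: int, avg_utilization: float) -> float:
    """Spare CPU across the old estate, in units of one old machine."""
    return machines * (1.0 - avg_utilization)


def spare_after(machines: int, avg_utilization: float,
                target_utilization: float) -> float:
    """Spare CPU on a consolidated host sized to hit the target utilization."""
    demand = machines * avg_utilization          # steady-state workload
    host_capacity = demand / target_utilization  # host sized for that workload
    return host_capacity - demand


# Figures from the text: 20 machines at 10%, consolidated to run at 85%.
old_spare = spare_before(20, 0.10)
new_spare = spare_after(20, 0.10, 0.85)

# Hypothetical assumption: each backup needs 30% of one old machine's CPU.
backup_cpu_per_vm = 0.30
backup_demand = 20 * backup_cpu_per_vm

print(f"Spare before consolidation: {old_spare:.2f} machine-equivalents")
print(f"Spare after consolidation:  {new_spare:.2f} machine-equivalents")
print(f"CPU needed for 20 concurrent backups: {backup_demand:.2f}")
```

Under these assumptions the old estate had far more idle CPU than the backups require, while the consolidated host has only a fraction of it. The exact numbers will differ in any real environment.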

VM Backup

There are 4 main methods for performing Virtual Machine Backup:

1) Install backup agents on each VM

2) Treat the hypervisor (i.e. the system the VMs run within) as a ‘Linux’ machine and back up the virtual file structures representing the VMs themselves

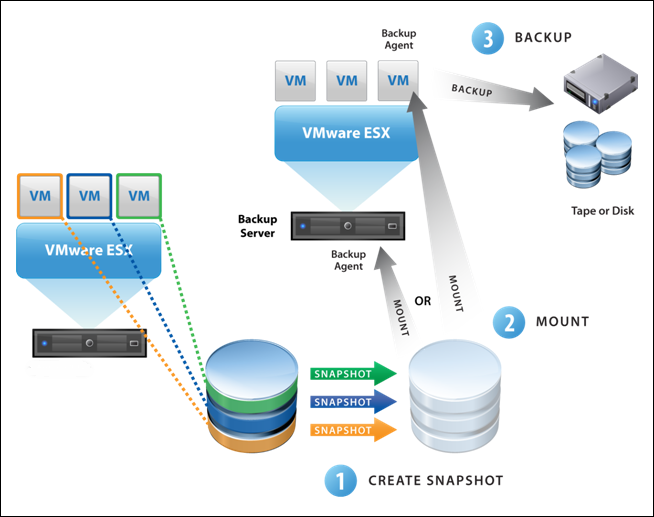

3) SAN or NAS snapshots

4) Vendor-specific backup APIs (e.g. VCB for VMware)

VMware VCB backup method

The key considerations in all cases are:

Does my backup have ‘Application Integrity’?

and

Does the backup format allow me to recover ‘fast enough’?

and

In recovering a single server, I will in fact hope to recover 4 to 20 ‘machines’, potentially simultaneously.
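The ‘fast enough’ question can be roughed out before any product is chosen. The Python sketch below estimates how long it would take to restore every VM on a failed host and compares that with a recovery time objective (RTO). The VM sizes, the restore throughput and the 4 hour RTO are all hypothetical figures, and the model assumes that simultaneous restores share one data path, so total time depends only on total data.

```python
from dataclasses import dataclass


@dataclass
class VirtualMachine:
    name: str
    data_gb: float


def host_restore_hours(vms, restore_gb_per_hour):
    """Hours to restore every VM on a host when all restores share one data path."""
    total_gb = sum(vm.data_gb for vm in vms)
    return total_gb / restore_gb_per_hour


def meets_rto(vms, restore_gb_per_hour, rto_hours):
    """True if the whole host can be restored within the RTO."""
    return host_restore_hours(vms, restore_gb_per_hour) <= rto_hours


# Hypothetical host: 10 VMs of 200 GB each, restored at 500 GB/hour.
host = [VirtualMachine(f"vm{i:02d}", 200.0) for i in range(10)]
hours = host_restore_hours(host, 500.0)
print(f"Estimated restore time: {hours:.1f} h")
print(f"Meets a 4 h RTO: {meets_rto(host, 500.0, 4.0)}")
```

A real assessment would also need to allow for application consistency checks and any per-VM recovery steps, which this sketch ignores.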

Some VM backup techniques work at a wholesale ‘disk image’ level and can be efficient during the backup process, but then require an entire disk volume to be restored OR re-presented for restore.

If all you require is a ‘file restore’ or a single database table, these ‘wholesale disk image’ methods can, at times, be cumbersome and costly to operate.
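To put a rough number on that cost, the Python sketch below compares the data moved by an image-level restore with that moved by a file-level restore of the same item. The 500 GB volume and 2 GB file are hypothetical sizes, and the sketch deliberately ignores the alternative of re-presenting (mounting) the image, which avoids the full copy but brings its own operational steps.

```python
def data_moved_gb(requested_gb: float, volume_gb: float, image_level: bool) -> float:
    """Data that must be moved to satisfy a restore request."""
    # An image-level restore brings back the whole volume;
    # a file-level restore brings back only what was asked for.
    return volume_gb if image_level else requested_gb


# Hypothetical case: one 2 GB file is needed from a 500 GB volume.
volume_gb = 500.0
file_gb = 2.0

image_cost = data_moved_gb(file_gb, volume_gb, image_level=True)
file_cost = data_moved_gb(file_gb, volume_gb, image_level=False)
overhead = image_cost / file_cost

print(f"Image-level restore moves {image_cost:.0f} GB")
print(f"File-level restore moves {file_cost:.0f} GB")
print(f"Overhead factor: {overhead:.0f}x")
```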

Balancing these various methods, and then ensuring that VM backup does not introduce a second complex element into your existing physical backup regime, is a challenge and may require significant scripting to glue disparate systems together.

CD-DataHouse starts with an ‘Application and Data’ analysis, first to gauge the various backup window challenges and recovery requirements (RPO and RTO), and then to produce a proposal based on various technology offerings and customer reference calls, helping our clients assess the most cost-effective options.