The SAN Storage Trinity

By Admin, October 13, 2017

With Storage, the same design challenge always applies – balancing

- Point 1: Terabyte Capacity

- Point 2: IOPS Performance, meaning true Random 4KB 75% Read / 25% Write ‘type’ IOPS

- and Point 3: Cost

The above three form the holy trinity of a ‘basic’ Storage Design

Tailored SAN Design

But now let's add the other critical aspects we need to consider when tailoring the storage to each customer's taste.

Overall System Availability

- Do we need a Single Controller / Single Node, or Multiple Controllers to handle the failure of a single controller?

- In essence, 99% of storage proposed is dual controller, unless we are talking about archive systems that could afford to be down for a day or so

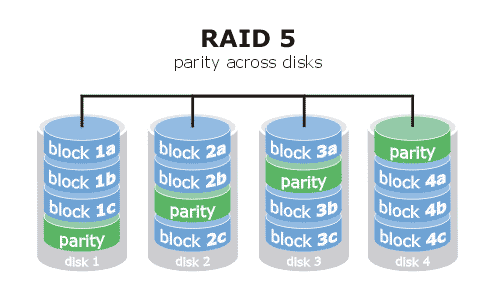

Data/Disk Redundancy

- RAID 1/10, RAID 5 or RAID 60

- But once we make this selection, we immediately need to revisit Point 2

- Our RAID Selection will critically impact our Write performance

- and in RAID 10, it will at least halve our overall capacity – so we need to revisit Point 1

- and now we need to Revisit Point 3
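To make the knock-on effect concrete, here is a minimal sketch of how a RAID choice feeds back into Points 1 and 2. It uses the commonly quoted write penalties (2 for RAID 10, 4 for RAID 5, 6 for RAID 6) and the 75% Read / 25% Write mix from above. The disk count, disk size and per-disk IOPS are hypothetical figures chosen for illustration, and the sketch ignores hot spares, cache and nested levels such as RAID 60.

```python
# Commonly quoted write penalties: back-end I/Os generated per host write.
WRITE_PENALTY = {"RAID 10": 2, "RAID 5": 4, "RAID 6": 6}


def usable_capacity_tb(level, disks, disk_tb):
    """Usable capacity, ignoring hot spares and formatting overhead."""
    if level == "RAID 10":
        return disks * disk_tb / 2      # every block is mirrored
    if level == "RAID 5":
        return (disks - 1) * disk_tb    # one disk's worth of parity
    if level == "RAID 6":
        return (disks - 2) * disk_tb    # two disks' worth of parity
    raise ValueError(f"unsupported RAID level: {level}")


def front_end_iops(level, disks, iops_per_disk, read_fraction=0.75):
    """Host-visible IOPS for a given read/write mix."""
    raw_iops = disks * iops_per_disk
    write_fraction = 1.0 - read_fraction
    return raw_iops / (read_fraction + write_fraction * WRITE_PENALTY[level])


# Hypothetical shelf: 12 disks of 4 TB, 150 random IOPS each (assumed).
for level in WRITE_PENALTY:
    capacity = usable_capacity_tb(level, disks=12, disk_tb=4)
    iops = front_end_iops(level, disks=12, iops_per_disk=150)
    print(f"{level}: {capacity:.0f} TB usable, ~{iops:.0f} IOPS at 75/25")
```

The same twelve disks give noticeably different capacity and IOPS depending on the RAID level, which is why the cost in Point 3 has to be revisited each time.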

Compromise

At this point, we have to either:

- add more Disks to increase capacity and IOPS

- move to SSD Disks for Performance

- or take another approach and add a larger number of 7.2K RPM disks to achieve the same level of IOPS
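As a rough sketch of that trade-off, the snippet below works out how many disks are needed to satisfy both an IOPS target and a capacity target. The per-device IOPS values, the disk sizes and the 5000 IOPS / 20 TB target are assumptions for illustration only, not vendor figures, and the defaults model RAID 10 (write penalty of 2, half the raw capacity usable).

```python
import math

# Rule-of-thumb random IOPS per device. Assumed values for this sketch.
IOPS_PER_DEVICE = {"7.2K RPM": 75, "SSD": 5000}


def disks_needed(target_iops, target_tb, device_iops, device_tb,
                 write_penalty=2, read_fraction=0.75,
                 capacity_efficiency=0.5):
    """Disks required to meet both the IOPS and the capacity target."""
    # Host IOPS translated into back-end disk I/Os for the read/write mix.
    back_end_iops = target_iops * (
        read_fraction + (1 - read_fraction) * write_penalty
    )
    for_iops = math.ceil(back_end_iops / device_iops)
    for_capacity = math.ceil(target_tb / (device_tb * capacity_efficiency))
    # Whichever constraint needs more disks wins.
    return max(for_iops, for_capacity)


# Hypothetical requirement: 5000 host IOPS and 20 TB usable.
print("7.2K RPM, 4 TB:", disks_needed(5000, 20, IOPS_PER_DEVICE["7.2K RPM"], 4))
print("SSD, 2 TB:", disks_needed(5000, 20, IOPS_PER_DEVICE["SSD"], 2))
```

Under these assumptions the 7.2K RPM design is sized by IOPS while the SSD design is sized by capacity, which is the compromise in a nutshell.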

RAID

By introducing RAID, we have likely satisfied our ‘need’ to handle the failure of 1, 2 or more disks

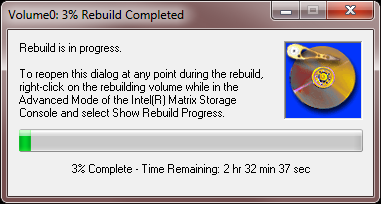

- But a failed disk in a RAID needs to be rebuilt, and that rebuild time can grow dramatically as drive capacities increase and the array stays busy serving production I/O

- Plan for 60 to 80 hours for a Rebuild of a 6TB drive in a RAID 6 array
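A back-of-envelope way to sanity check a rebuild estimate is to divide the drive capacity by the effective rebuild rate. The sketch below assumes decimal units (1 TB = 10^12 bytes) and treats the rebuild rate as a single sustained figure, which is a simplification. The rates shown are hypothetical; real rates depend on the controller, the RAID level and how busy the array is.

```python
def rebuild_hours(disk_tb, rebuild_mb_per_s):
    """Hours to rebuild one disk at a sustained effective rate."""
    disk_bytes = disk_tb * 1e12                  # decimal terabytes
    seconds = disk_bytes / (rebuild_mb_per_s * 1e6)
    return seconds / 3600


# Hypothetical effective rebuild rates in MB/s for a 6 TB drive.
for rate in (20, 25, 30, 100):
    print(f"{rate} MB/s -> {rebuild_hours(6, rate):.1f} hours")
```

Read the other way round, a 60 to 80 hour rebuild of a 6TB drive implies an effective rate somewhere in the region of 20 to 30 MB/s, far below what the drive can stream when it has nothing else to do.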

RAID Rebuild Times

Now we have introduced a new problem

- It's possible that the RAID rebuild time, and having our data at risk during the rebuild process, does not meet our needs

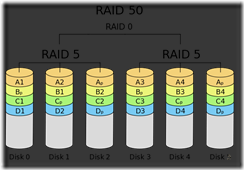

- OK, let's go back and revisit RAID again. Maybe RAID 50 or RAID 60 could alleviate some of the statistical problems associated with disk failure (i.e. spread the risk, but not eliminate it)
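To get a feel for what ‘spreading the risk’ means, here is a deliberately simplified sketch. It assumes disks fail independently at a constant rate, approximates that rate with an annual failure rate (AFR), and estimates the chance of at least one further failure in the same RAID group during the rebuild window. The 2% AFR, the 70 hour rebuild and the group sizes are all assumptions; correlated failures and unrecoverable read errors are ignored, so treat the output as a comparison between layouts rather than a prediction.

```python
import math

HOURS_PER_YEAR = 8760


def further_failure_probability(remaining_disks, annual_failure_rate,
                                rebuild_hours):
    """Chance that at least one remaining disk fails during the rebuild."""
    expected_failures = (
        remaining_disks * annual_failure_rate * rebuild_hours / HOURS_PER_YEAR
    )
    return 1 - math.exp(-expected_failures)


# One 12-disk group versus two 6-disk groups (as in a nested RAID layout).
# A failed disk leaves 11 or 5 survivors in the affected group.
for survivors in (11, 5):
    p = further_failure_probability(survivors, 0.02, 70)
    print(f"{survivors} surviving disks in the group: {p:.4%}")
```

Smaller groups lower the exposure of any one rebuild, but the probability never reaches zero, which matches the ‘spread, not eliminate’ point.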

Rackspace and Power Costs

While all of this is going on, the number of disks in our system has likely grown and grown

- Now we have a new problem

- We’re quickly running out of rackspace

- (let alone power or heat issues)

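A quick way to see the rack space problem coming is to convert the disk count into rack units. The shelf density, shelf height and controller height below are hypothetical defaults for illustration; check the actual enclosure specifications for any real design.

```python
import math


def rack_units(disks, disks_per_shelf=12, units_per_shelf=2,
               controller_units=2):
    """Rack units for the controllers plus enough shelves for the disks."""
    shelves = math.ceil(disks / disks_per_shelf)
    return controller_units + shelves * units_per_shelf


# Hypothetical designs: a small SSD system versus a large 7.2K RPM system.
for disks in (20, 84):
    print(f"{disks} disks -> {rack_units(disks)}U")
```

Power and cooling scale in a similar way with the number of shelves, so a design that wins on cost per disk can still lose once the rack is counted.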

Storage design was never meant to be easy. It's an iterative process, where each change has a knock-on effect on each and every other parameter.

In Closing

Most small businesses visit this challenge once every 4 years.

It's an impossible decision to make, and we often see overspend in the order of 30-40%.

This is justified by: ‘I can always use plenty of extra capacity’.

My take: You’ve likely burnt 30K, 50K, 100K or even 200K GBP more than you needed to.

That spend could be put to improving your security, network or data protection position.

If you’re in the middle of a storage refresh and the deciding factor is how much discount you are about to receive, I hope the points above offer some guidance.